OpenAI launched its deep research feature in February 2025. Unlike previous versions of ChatGPT, deep research doesn’t just compile information from webpages. Instead, it looks for and cites multiple reliable sources.

Depending on the complexity of the question, deep research can take anywhere from 5 to 30 minutes. It’s perfect for tasks that require researching multiple websites to get an accurate, complete answer.

I personally use ChatGPT’s deep research feature about once a week. It’s excellent at synthesizing data and helping me find information from the dustiest corners of the internet. What would’ve taken me days takes it minutes (it’s scary, sometimes, honestly).

But deep research, like other OpenAI models, can also hallucinate. The chances are lower with deep research, but they’re not zero. It’s been known to make up links, stats, and facts. Misinformation can be harmful in the worst-case scenario (in scientific and academic fields) and erode brand trust in the best-case scenario.

So I put it to the test. Here are 10 experiments I ran with ChatGPT’s deep research to verify whether it provides accurate and helpful information.

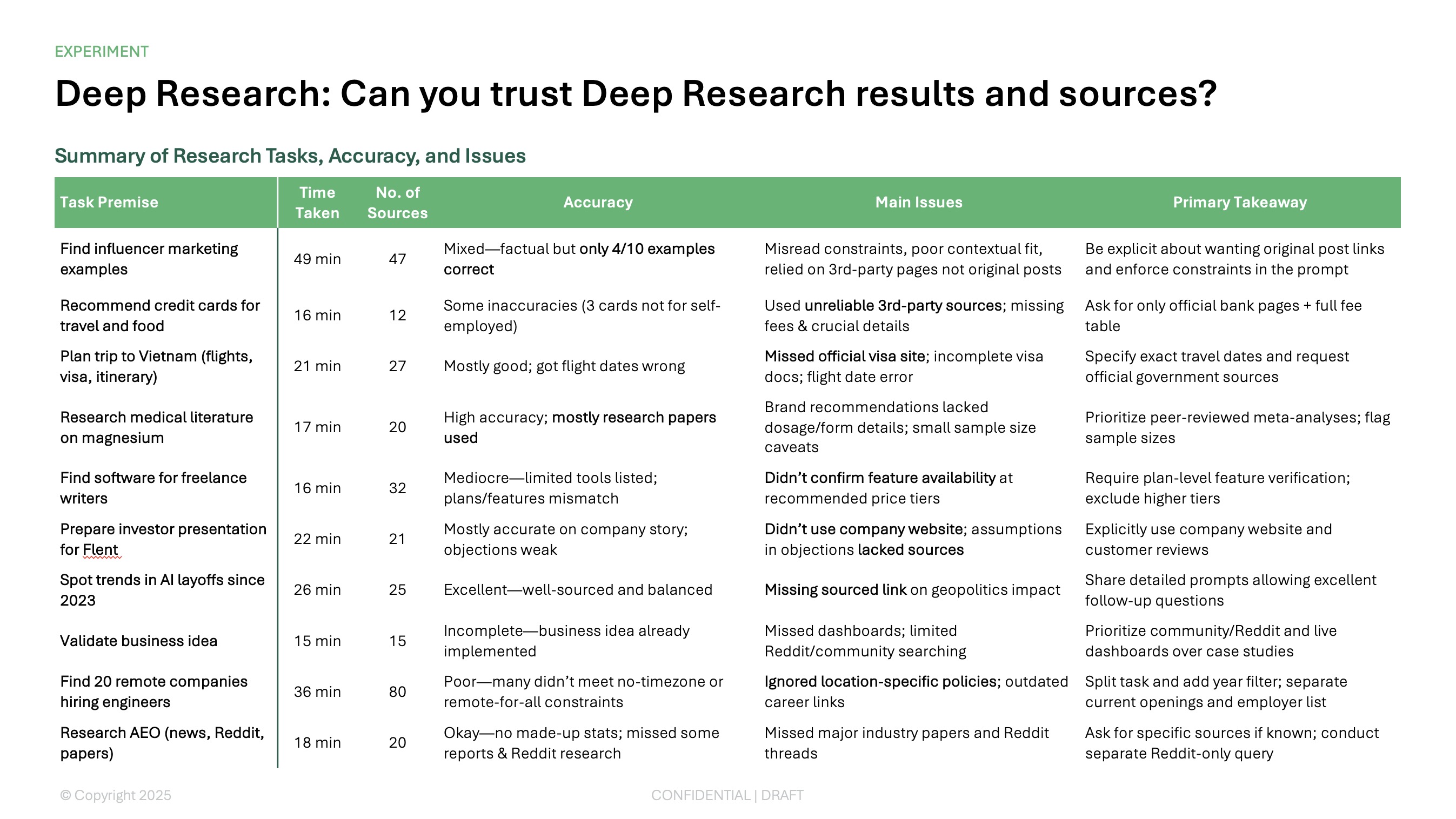

The TL;DR: Here’s a summary of the findings

Making up links or facts wasn’t actually the most common mistake deep research made in this experiment. Instead, the most common errors were:

- Not presenting accurate, real-time information

- Failing to abide by one or two of the constraints set

- Producing lower-quality output when a query is long and bundles multiple tasks

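Here’s a table summarizing all the experiments and findings:

| # | Experiment | Time taken | Sources evaluated | Accuracy |
|---|---|---|---|---|
| 1 | Finding unique influencer marketing examples | 49 minutes | 47 | No hallucinations, but only 4 of 10 examples matched the brief |
| 2 | Evaluating credit cards | 16 minutes | 12 | 3 of 10 cards aren’t available to self-employed people |
| 3 | Planning an international trip | 21 minutes | 27 | Wrong month for flights; visa details and itinerary accurate |
| 4 | Digging through existing medical research | 17 minutes | 20 | Accurate; brand recommendations subpar |
| 5 | Examining multiple tools in a category | 16 minutes | 32 | 3 of 5 recommendations didn’t match feature or budget needs |
| 6 | Gathering data for a presentation | 22 minutes | 21 | Company story accurate; objections relied on made-up assumptions |
| 7 | Spotting big-picture trends | 26 minutes | 25 | Accurate; one claim left uncited |
| 8 | Validating a business idea | 15 minutes | 15 | Accurate but incomplete; missed an existing product |
| 9 | Hunting for relevant job opportunities | 36 minutes | 80 | 14 of 20 companies didn’t meet the requirement |
| 10 | Dissecting multiple perspectives on a controversial topic | 18 minutes | 20 | Somewhat accurate; no made-up links or statistics |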

1: Finding unique influencer marketing examples

Premise: I asked ChatGPT to find some out-of-the-box influencer marketing examples for an article I was writing. I asked it to search for collaborations that feel unexpected, like a tech brand partnering with a skincare influencer. The caveat was that all examples should come from U.S.-based small businesses and date from 2024 or later.

Time taken: 49 minutes

Number of sources evaluated: 47 sources

Accuracy: The accuracy was there, no hallucinations. But out of the 10 examples I had requested, ChatGPT got only four right.

There was a lack of contextual understanding and an overblown focus on micro-influencer partnerships rather than unique partnerships.

Two of the examples deep research shared were pet foods partnering with pet brands, which directly contradicted my initial prompt.

Overall result quality: Although the result was factually accurate, I was disappointed with the results for this query. Here’s why:

- ChatGPT didn’t understand the context and showed examples that were the opposite of what I had requested

- All the links referenced were to third-party websites instead of the collaborations’ actual posts. I had to dig through and find the original source for each example

- I had expressed a preference for micro-influencers when ChatGPT asked follow-up questions, but the tool focused on that too much and cited micro-influencer collaborations instead of examples that met my core requirement

- Multiple cited sources were of poor quality. They didn’t link back to original examples or elaborate on the partnership details, making me question the authenticity of the results

Other observations and learnings: ChatGPT got the first two examples right, which makes me think a lack of data is behind the poor quality results. Plus, I have reason to believe deep research isn’t able to scrape from recent web sources. An article that matched the requirement to a T was published two months ago (written by yours truly), which wasn’t referenced anywhere in its sources.

Another thing I think I could’ve done better is specify that I’m looking for the actual Instagram, TikTok, or YouTube link where the collaboration is live.

I asked this in a follow-up query. Deep research found accurate links for five out of the 10 examples it cited earlier, but the rest were just irrelevant or didn’t match the description provided earlier.

For one vague example, it couldn’t find a link at all and accepted that instead of making up a link, which I appreciated.

2: Evaluating credit cards

Premise: I used deep research to find credit cards in India that would help me get the most out of my spending on travel and food. I shared which cards I currently have and what I’m looking for in a new credit card. In the follow-up questions, I also specified that I’m self-employed, so it wouldn’t recommend cards that are only issued to salaried folks.

Time taken: 16 minutes

Number of sources evaluated: 12 sources

Accuracy: Some of the information was wrong. Of the 10 cards ChatGPT recommended, three aren’t available to self-employed people. But the tool still explicitly said they’re open to all and included an income ceiling that applies only to salaried people.

Overall result quality: I liked the concise way of presenting information in this experiment. But for a few cards, crucial information (such as joining and renewal fees) was missing. I would’ve appreciated all the details in a table format, so I could evaluate the critical factors and benefits at a glance.

Another drawback was the lack of options. I already know multiple cards that would be a good fit for my needs that were absent from this list. It didn’t feel like deep research with only 10 cards, one of which I already had (and ChatGPT knew about it, too). There are far superior options in the market. But I wouldn’t have known that if I'd relied only on ChatGPT.

Other observations and learnings: ChatGPT didn’t use reliable sources for this experiment, which was a bit concerning to me, given the financial nature of the query. All banks have detailed landing pages for each of their credit cards, but the tool only linked to the bank’s website for three cards. For the rest of the suggestions, deep research cited a third-party website.

This was also why it recommended three cards that aren’t actually open to self-employed people (the third-party site had inaccurate information).

I would’ve preferred ChatGPT to use only official bank websites to share information, and maybe complement that with Reddit or other social sites to help me understand real users’ experiences with these cards. Maybe specifying this preference in the initial query would’ve helped.

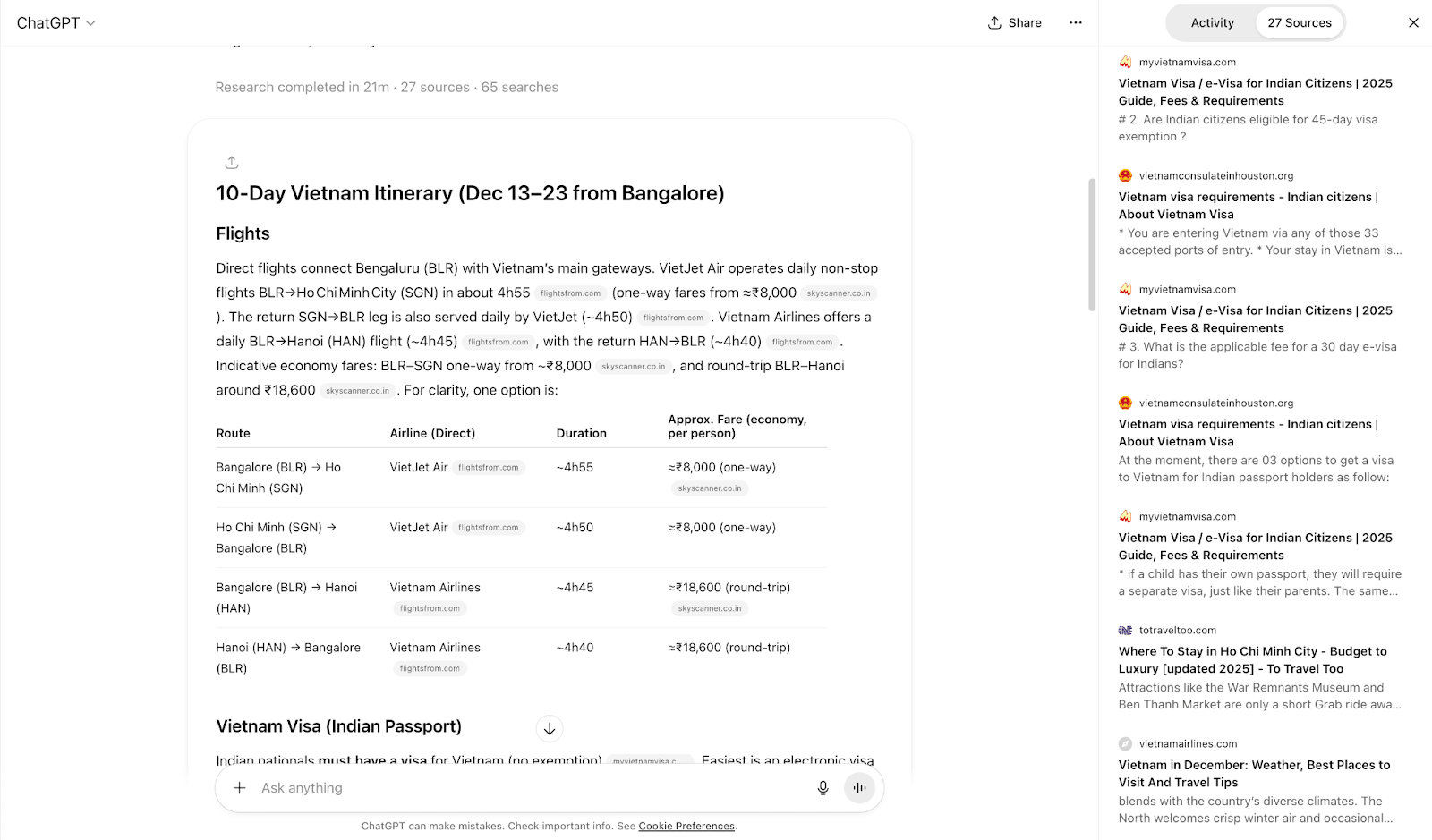

3: Planning an international trip

Premise: I used deep research to plan a year-end trip to Vietnam. I asked ChatGPT to search for flight options, explain the visa process, and prepare a rough itinerary for my vacation. I also asked it to avoid areas where it might rain, based on forecasts and seasonal patterns.

Time taken: 21 minutes

Number of sources evaluated: 27 sources

Accuracy: For the flights, ChatGPT completely fumbled the date and instead of giving me flight options for December, it showed me flights for November 🫠

The visa details were accurate, but it didn’t specify the documents you’d need (like your trip details, address, and passport validity).

The itinerary was perfect, followed all the constraints I had set, and recommended great places to stay, too (although I do wish it offered more choices).

Overall result quality: Except for the flight inaccuracy, this experiment was pretty close to 100% successful. I would’ve loved more details on visa and hotel options as mentioned previously. All that said, this was a pretty great first result.

Other observations and learnings: I noticed, like before, that ChatGPT didn’t cite the official visa website at all in its deep research. It relied on third-party websites only. These turned out to be accurate, sure, but I had to verify everything against the official site. If you’re planning an international trip, it’s wise to ask the tool to specifically check official websites (in case third-party sites aren’t updated or accurate).

I also think the output would’ve been better had I specified how many stay options I wanted for each place. If you already know multiple sites to evaluate for stay options, ask ChatGPT to search them as well. Right now, it only searches two or three websites at most to show hotel options.

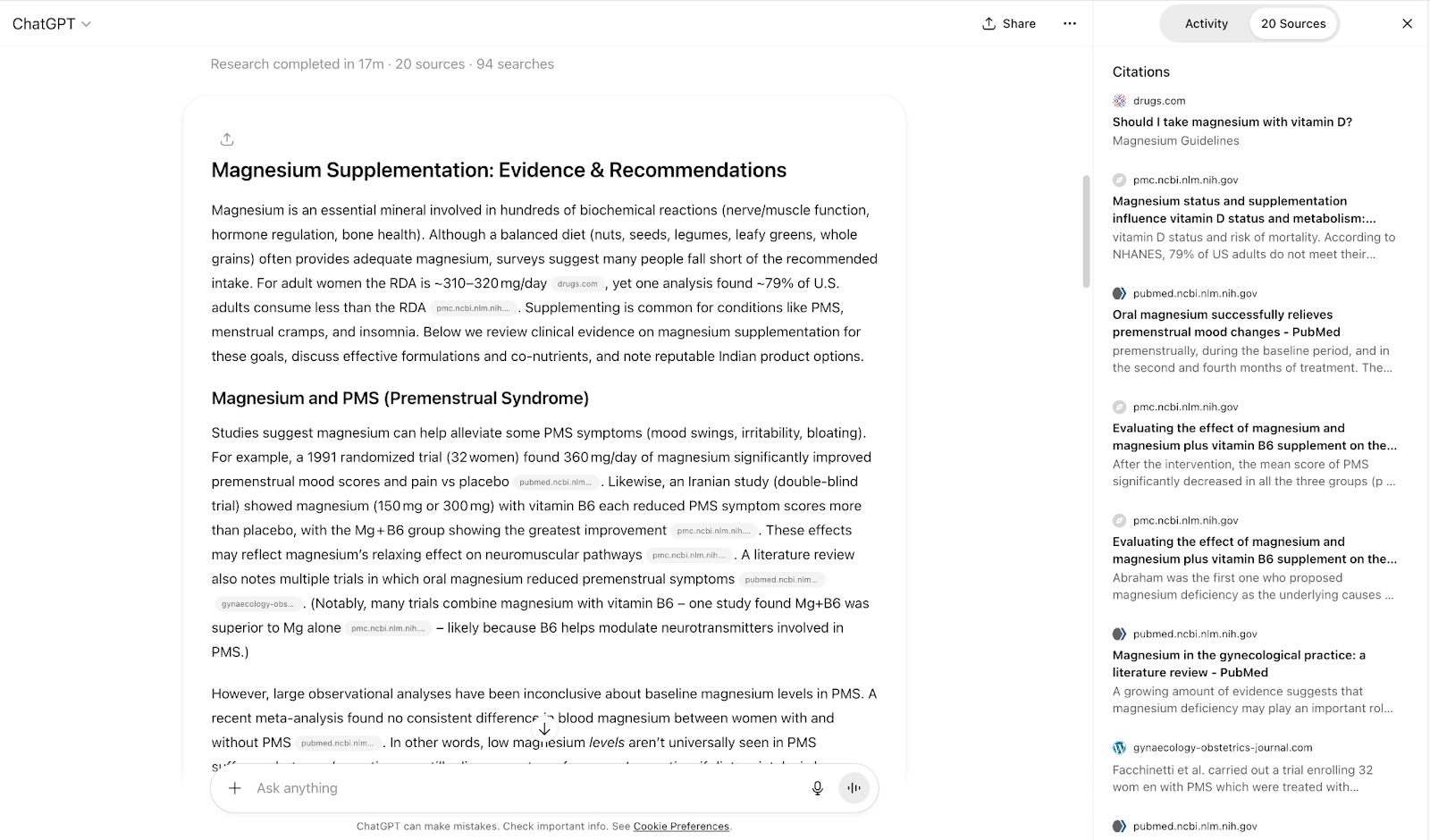

4: Digging through existing medical research

Premise: I asked ChatGPT to dig through medical papers and research documents to determine whether I need a magnesium supplement. I also listed all the medications I’m currently on and requested a detailed report on the magnesium supplement options currently available in the market.

Time taken: 17 minutes

Number of sources evaluated: 20 sources

Accuracy: The accuracy was spot-on here. ChatGPT cited various research papers and a couple of health websites to support its information, but I liked that it mostly relied on research papers.

The brand recommendations were subpar because they didn’t specify the magnesium content or whether each tablet contained a bioavailable form.

Overall result quality: The level of information was nearly perfect here, except for the brand recommendations. I didn’t like that deep research used two random health websites (which weren’t very reliable) as references, but that’s a minor flaw. A majority of the findings were from reliable research papers, literature reviews, and meta-analyses.

It also presented contrasting findings, so I can make my own decision without being swayed in one direction. Overall, I have little to no complaints here.

Other observations and learnings: It helps a ton if you specify which sources you want ChatGPT to dig through. Because I had requested it to look through medical journals, deep research came through with a much more reliable report.

But a caveat here is that most of the referenced research papers had extremely small sample sizes (like 30 women), which wouldn’t make their findings particularly strong. ChatGPT specified the sample size for each study, but I also wish it had highlighted how small sample sizes can affect study findings.

Lastly, it didn’t go into detail about the brands and used a third-party website to recommend the companies. I’m guessing the tool's quality degrades toward the end if it’s been given multiple tasks. It’s better to give one detailed request first, then follow up with another. Here’s proof:

I’ll still say the number of brands it looked up was very, very few compared to the options available in the market. I had to explicitly ask it to evaluate at least 10 brands to get a detailed report.

⚠️ Note: Don’t take medical advice from ChatGPT, deep research or not. I had already tested my magnesium levels and was on a doctor-prescribed supplement at the time of running this research. I did this experiment to validate my results.

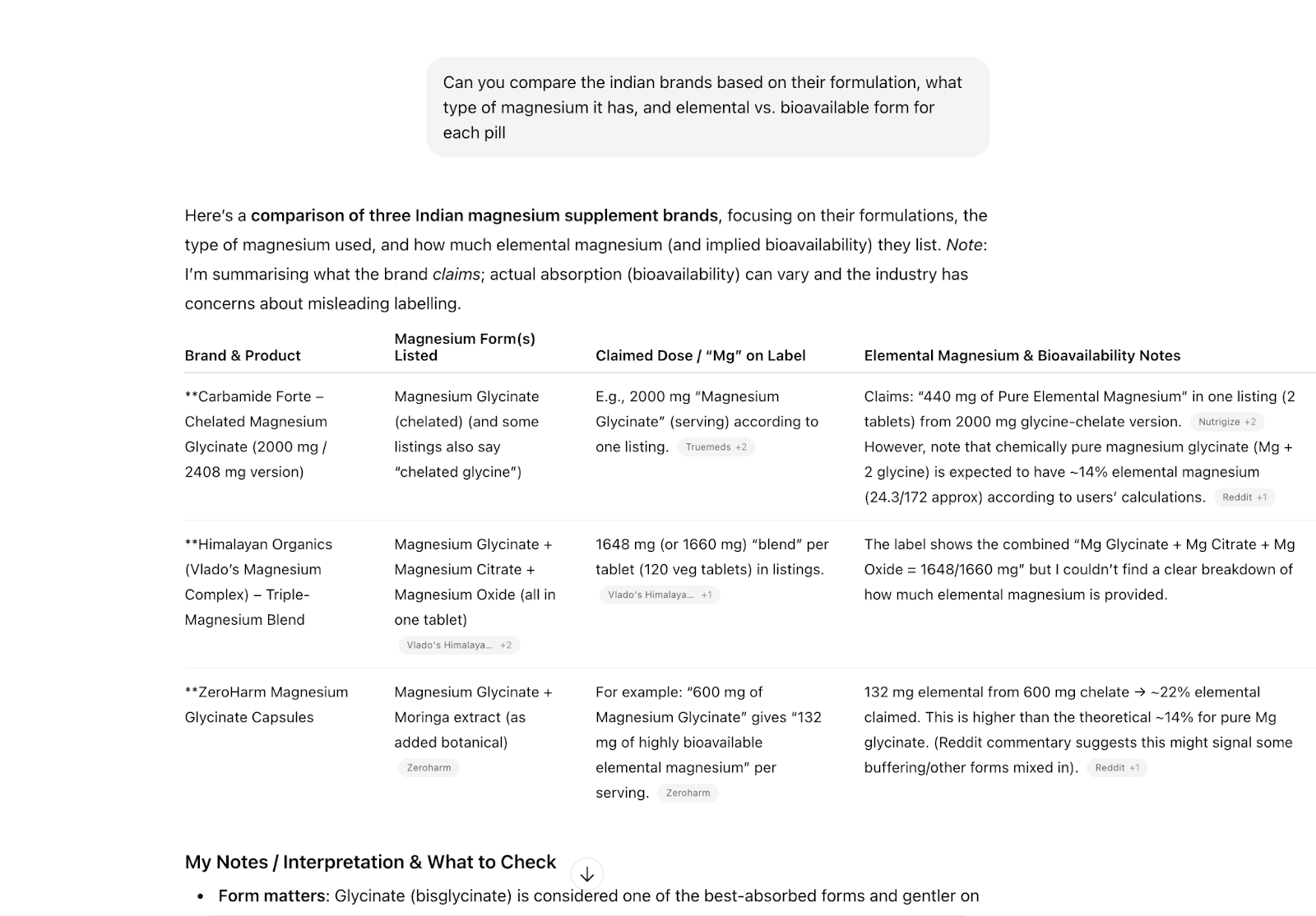

5: Examining multiple tools in a category

Premise: I asked ChatGPT to conduct deep research on the project management tools available in the market for freelance writers. The constraint was the budget (no more than $20/month). I also asked it to check reviews, especially on Reddit, and to look for tools specifically designed for freelance writers. For features, I asked for tools that let me track my working time, send and track invoices, manage projects, and see data about my business.

Time taken: 16 minutes

Number of sources evaluated: 32 sources

Accuracy: I wouldn’t say the accuracy was A+ in this experiment. For starters, ChatGPT just found tools with the features I requested without checking if the plan it’s recommending (which meets my budget) actually has those features.

For example, Bonsai does have time-tracking and invoicing features. But you can’t see the split between billable vs. non-billable hours in the plan I can afford. The basic plan also doesn’t have any invoicing features (they’re restricted to the higher-tier plans). ChatGPT did mention this, but only as a con in the detailed description. In my opinion, given the constraints I had placed and the features I had requested, Bonsai shouldn’t have made the cut at all.

Similarly, Toptal and Fiverr Workspace don’t qualify because both of these tools have little to no project management features.

Overall result quality: To put it simply, I wasn’t impressed. Three out of five recommendations didn’t match my feature or budget needs. The descriptions were accurate, though, and this time the tool only used the official website as its source. I still think it left out a few tools that should’ve made the list, like Harlow (which I currently use).

Other observations and learnings: Again, I think it would’ve helped if I had specified how many tools I want ChatGPT to evaluate to have more options. I also think the output would’ve been better if I had included a list of negotiables and non-negotiables for the features.

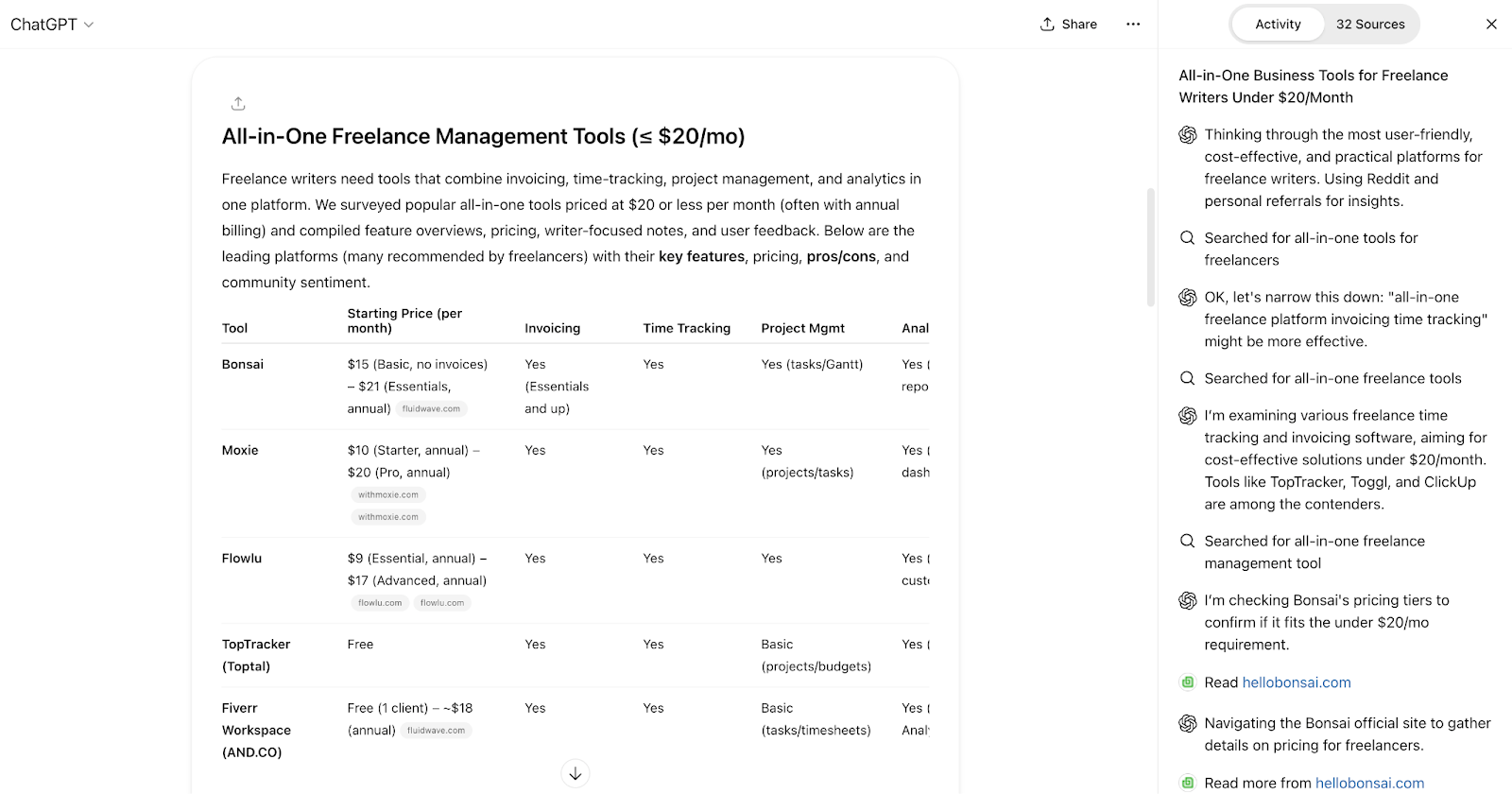

6: Gathering data for a presentation

Premise: I used deep research to help me create a detailed presentation for investors. I used the company Flent as an example and asked ChatGPT to analyze its business model inside and out and identify similar companies in new locations or industries. I also requested the tool to predict possible objections an investor might have and prepare answers for them.

Time taken: 22 minutes

Number of sources evaluated: 21 sources

Accuracy: The story about the company I was researching was 100% accurate, although I do wish the tool had presented the timeline a bit better. Deep research also found similar businesses that matched my requirements. The objections section was also accurate, but the arguments had some loopholes and made-up assumptions.

Overall result quality: The quality was good, although the sources were far fewer than I had hoped. ChatGPT didn’t research Flent’s own website to learn about its story and business model—it relied on news sites instead.

The potential investor objections section was lukewarm. The points it raised were strong, but the arguments didn’t carry much weight. For example, it said Flent’s market is insulated from market downturns because it primarily caters to tech and finance. There’s no breakdown of Flent’s customer base anywhere, so I don’t understand where this industry lean is coming from.

Besides, most folks living in Bangalore (and other large cities Flent is planning on expanding to) are migrants from other cities who might move out as work-from-home becomes more common. The report doesn’t account for this objection either.

I also found it didn’t have any objections from the landlord’s perspective. What if they can make more money leasing directly? What if a competitor pops up and offers a better deal to both parties? What if the landlord wants to cut the contract short in the middle of the year—what happens to the tenants then?

Overall, beyond the objections section, the result was pretty good. The sources just weren’t diverse.

Other observations and learnings: It’s no surprise, but ChatGPT can’t think like a human does. In this case, I would probably get better results by running another deep research query about investor objections with more specificity. It would’ve also helped to ask it to pull investor hesitations from specific sources. I should’ve also asked to see more reviews about the company online, so I could see customer objections alongside ChatGPT’s predictions of what some investor arguments might be.

Pssst…if you’re gathering your presentation data using deep research, convert it to slides in a few clicks using Plus.

7: Spotting big-picture trends

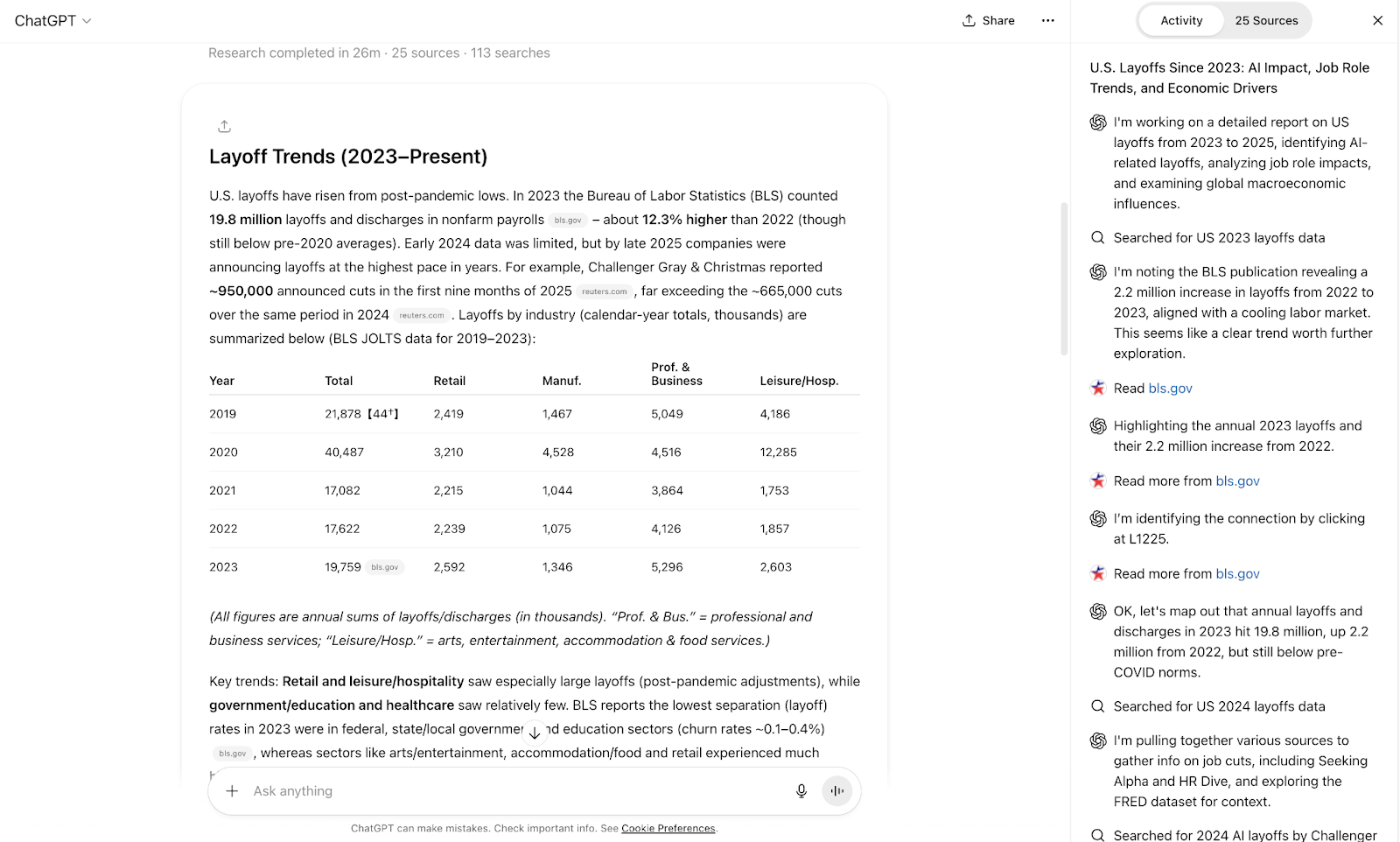

Premise: For the seventh experiment, I asked ChatGPT to conduct deep research on layoffs due to AI in the last two years (since 2023). I wanted to find patterns in the most impacted vs. most stable jobs and see what experts predict will happen in the future. I also asked it to highlight how external economic factors have affected layoffs, so I’d get a complete picture.

Time taken: 26 minutes

Number of sources evaluated: 25 sources

Accuracy: This was another hit, with near-100% accuracy. Almost every claim in the report was tied to a reliable source and presented perfectly. In the follow-up question, I had asked for the report to be longer than a summary but shorter than a full-length report, and ChatGPT nailed that sweet spot. The one gap: the tool didn’t cite any source for its explanation of how global geopolitics impacted layoffs. That doesn’t mean the claim is untrue, but I couldn’t trace it back to anything.

Overall result quality: I have no complaints about the quality of this experiment (and that’s a first!). It tied everything together well and met all the requirements I had set.

Other observations and learnings: I think what made this experiment so successful was the detailed and specific prompt. Because I shared an in-depth prompt, ChatGPT asked excellent follow-up questions, resulting in an output that was exactly what I was looking for.

8: Validating a business idea

Premise: I asked ChatGPT to critique a business idea I was noodling with. The idea was to create an influencer marketing examples dashboard with filters for location, collaboration type, influencer size, etc. It’d be updated frequently to remain fresh and relevant. I wanted to know whether marketers actually need this product, or if a version of it already exists.

Time taken: 15 minutes

Number of sources evaluated: 15 sources

Accuracy: The findings presented were accurate, but quite incomplete. For instance, it said no such products currently exist in the market, when I already knew about a dashboard that exists. It matches the business idea I shared perfectly, so I don’t know why deep research wasn’t able to find it. The sources cited were accurate though, no made-up links.

Overall result quality: Subpar. Instead of finding live dashboards of influencer marketing examples, it linked to case studies of influencer management software. And despite my asking it to scour influencer marketer communities and Reddit, it didn’t surface any relevant threads.

Other observations and learnings: I noticed that ChatGPT barely did any research on Reddit, communities, or social media sites, even though I had asked it to. Perhaps the result would’ve been better had I reserved that request for a follow-up question. But the inability to find an existing dashboard eroded my trust.

I liked deep research’s argument that marketers can technically find collaboration examples using influencer-discovery software, since these tools list a creator’s previous collaborations. Other than that, I didn’t learn anything new.

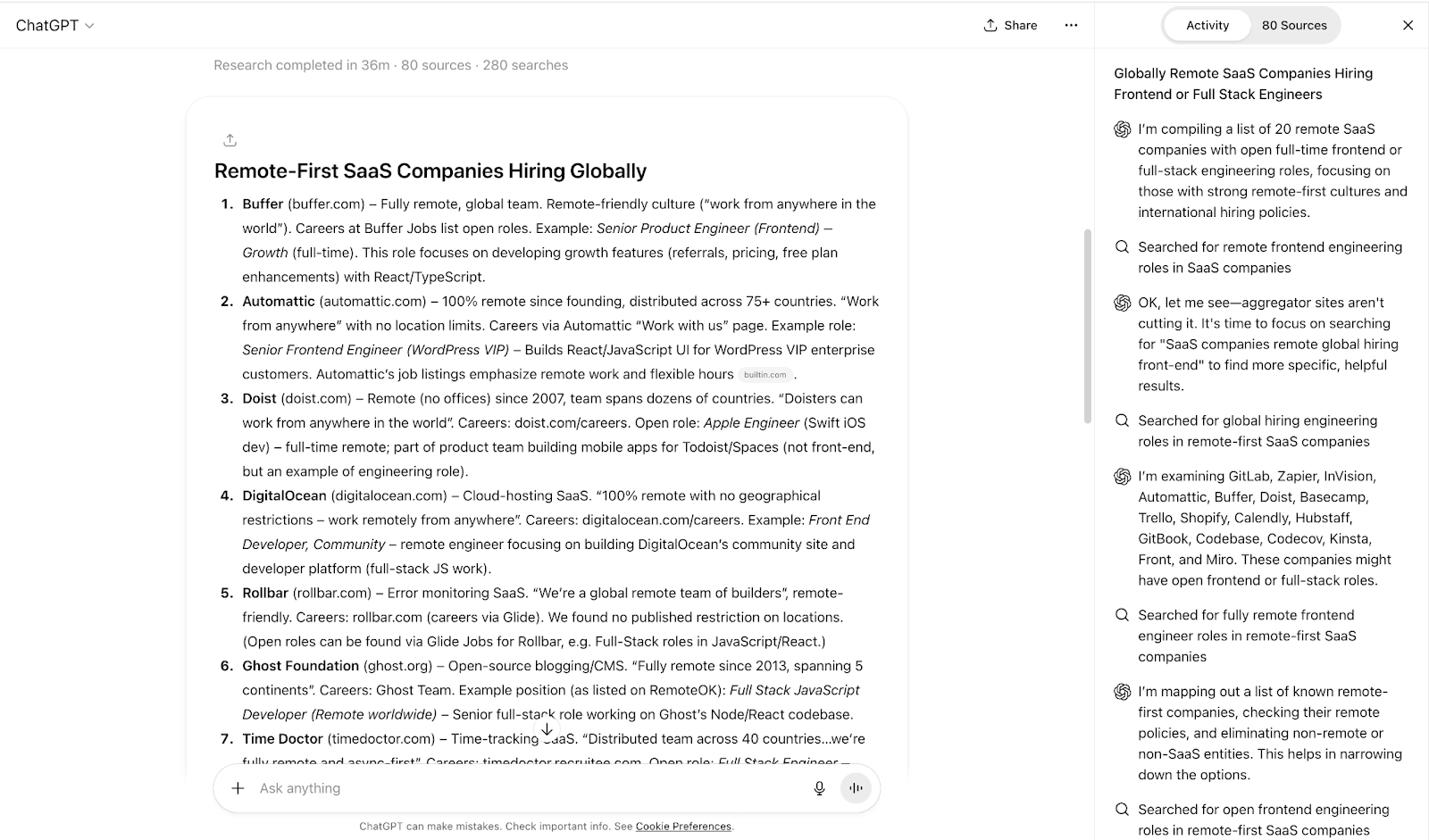

9: Hunting for relevant job opportunities

Premise: This time, I kept it simple. I asked ChatGPT to find 20 remote companies that allow their employees to work from anywhere (no US-only restrictions) and are currently hiring software engineers. To make it a little harder, I requested only companies with no time zone restrictions on employee availability, but I fully expected it to fail at this particular sub-task.

Time taken: 36 minutes

Number of sources evaluated: 80 sources

Accuracy: Of the 20 companies, 14 didn’t meet the requirement. Eight were remote, but only in specific locations like the U.S., Argentina, Canada, or Bangalore. One wasn’t remote but hybrid. One company was acquired by a parent company that isn’t at all remote. Two companies had no details on whether they’re remote, hybrid, or in-office. Two were fully remote but had overlapping working-hour requirements.
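If you want to sanity-check that breakdown, here’s a quick tally in Python. It’s only a sketch: the counts come straight from the paragraph above, and the category labels are my own shorthand.

```python
# Failure categories for experiment 9. Counts are taken from the paragraph
# above; the label wording is shorthand, not ChatGPT's output.
TOTAL_COMPANIES = 20
failures = {
    "remote only in specific locations": 8,
    "hybrid, not remote": 1,
    "acquired by a non-remote parent company": 1,
    "no details on remote/hybrid/in-office": 2,
    "fully remote but with overlapping-hours requirements": 2,
}

failed = sum(failures.values())
passed = TOTAL_COMPANIES - failed
pass_rate = passed / TOTAL_COMPANIES

print(f"{failed} of {TOTAL_COMPANIES} failed; {passed} passed ({pass_rate:.0%})")
# List the reasons from most to least common.
for reason, count in sorted(failures.items(), key=lambda item: -item[1]):
    print(f"  {count:>2}  {reason}")
```

In other words, only six of the 20 companies (30%) actually fit the brief, and location restrictions alone account for more than half of the misses.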

It wasn’t such a bummer that ChatGPT couldn’t find fully remote companies; it was more that it didn’t mention caveats like location-specific restrictions. The tool simply said eight companies hire globally, when they just…don’t. 🤷

Instead of fumbling with accuracy, I would’ve preferred ChatGPT to list fully remote companies like Plus and Zapier, even if they aren’t hiring engineers right now. That would’ve still made the list useful if someone wanted to keep track of when relevant roles open for them.

Overall result quality: The overall presentation of the findings wasn’t great either. For starters, the tool didn’t link to or cite the actual careers page of these companies. Instead, it just ‘wrote’ the URL where I could see the open roles (like “see careers at: companyname.com/careers”). It also pulled outdated quotes from companies’ career pages.

Other observations and learnings: I designed the prompt to be a little unrealistic (no time zone restrictions) because I thought the initial request was too easy for deep research.

But I think adding three tasks to a single deep research query (software engineer roles, fully remote, no time zone restrictions) actually threw the tool for a loop, and it returned inaccurate results.

I also think it would’ve helped if I'd added the year to the prompt—specifying that I’m looking for companies that are fully remote in 2025.

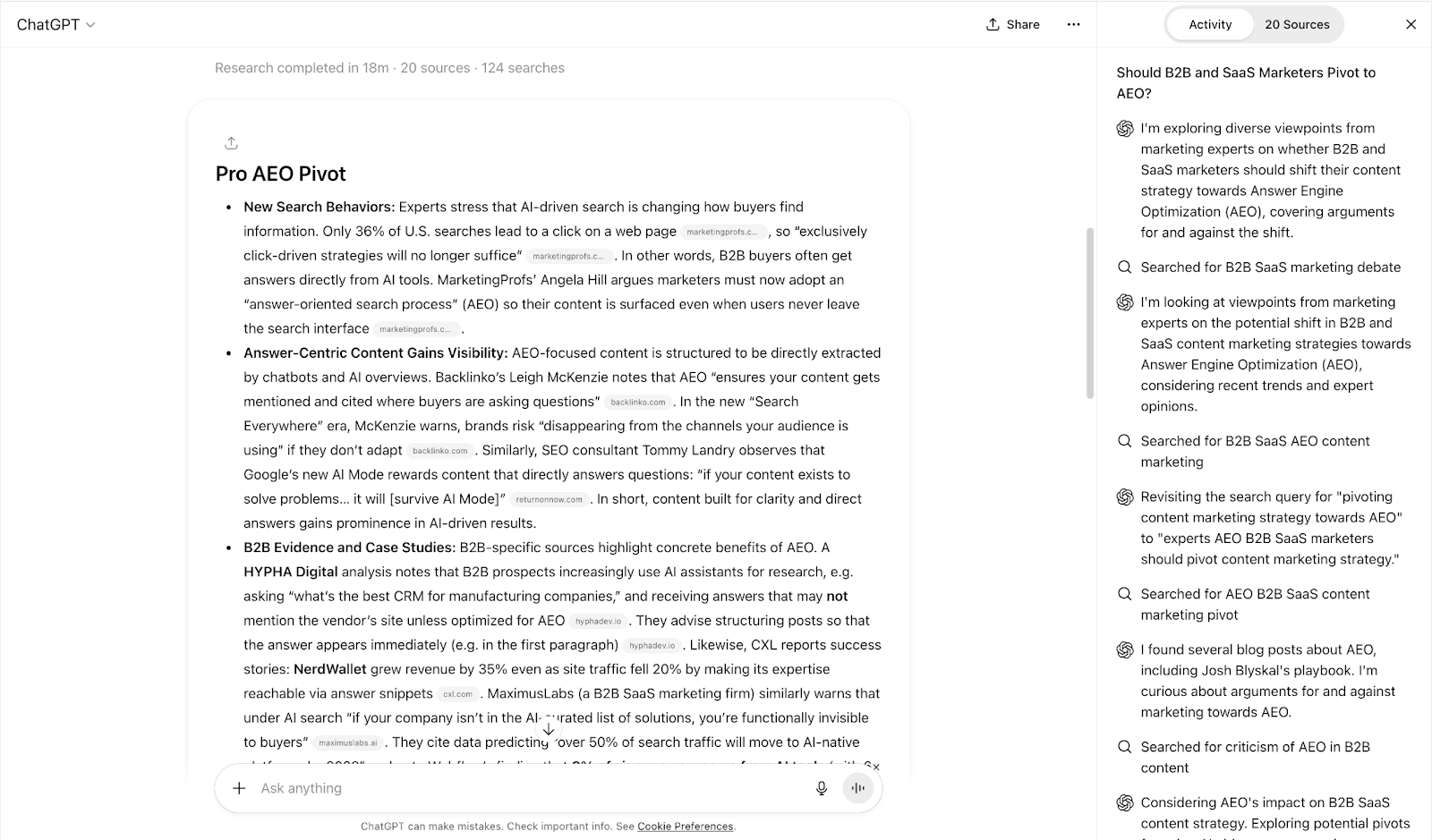

10: Dissecting multiple perspectives on a controversial topic

Premise: AI has introduced Answer Engine Optimization (AEO) to the marketing world. I asked ChatGPT to help me understand the conflicting viewpoints on AEO so I could get a complete picture of how in-house marketers are experimenting with this new distribution channel. I asked the tool to dig through newsletters, articles, Reddit threads, and research papers.

Time taken: 18 minutes

Number of sources evaluated: 20 sources

Accuracy: The results were somewhat accurate. ChatGPT didn’t make up any links or statistics, but it did reference third-party sites that cited another research paper without an official link. I also found it missed quite a few prominent research papers from industry-leading companies (like Ahrefs or Semrush) and didn’t reference a single Reddit thread on the topic, which would have helped me understand public sentiment.

Overall result quality: I’d say the result quality in this experiment was okay, but there’s definitely scope for improvement.

For starters, deep research could use more reliable, recent sources and also dig through Reddit to give a more accurate picture of what people are saying beyond the reports.

I liked the balanced pros-and-cons approach, though, which would help anyone reading get a decent picture of the current state of AEO.

Other observations and learnings: I don’t think it’s wise to lump Reddit in with other sources in your deep research requests. I’ve noticed the tool skips it most of the time, but does an excellent job of highlighting relevant Reddit threads when that’s the only thing you ask for.

Other than that, I think it would’ve also helped if I had set a time frame (what people are saying about AEO from 2024 to now) to keep the research reports recent.

3 best practices to get the most accurate output from deep research

After running these 10 experiments, here’s what I think you should do to ensure you get the most accurate and useful information from your deep research queries:

- Fact-check, always. The chances of AI hallucinations will decrease as AI tools improve, it’s true. But it’s not worth it to leave even the slightest chance for error. The good thing is ChatGPT always cites its sources—so you can easily verify if what it’s saying is true and relevant.

- Be as specific as possible. The vaguer you are, the higher the chances that AI tools share something that’s not entirely relevant or useful. Detailed prompts will provide much more accurate and helpful results.

- Don’t bundle too many queries into one prompt. It’s best to assign one major task per deep research query. The more tasks you add, the lower the quality of the AI tool’s output. Plus, you can get much better results by splitting the query into two.
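Since so many of my fact-checking detours came from deep research citing third-party sites instead of official ones, here’s a small Python sketch of how you could triage a report’s sources before verifying them. It’s an illustration under assumptions, not part of ChatGPT: `split_sources` is a helper name I made up, and the URLs and the `examplebank.com` domain are placeholders. You’d paste in the links from your own report and list the domains you consider official.

```python
from urllib.parse import urlparse


def split_sources(cited_urls, official_domains):
    """Separate cited URLs into official and third-party lists by hostname.

    Hypothetical helper for illustration; "official" is whatever you list.
    """
    official, third_party = [], []
    for url in cited_urls:
        host = (urlparse(url).hostname or "").lower()
        # A host counts as official if it equals, or is a subdomain of,
        # one of the listed domains.
        is_official = any(
            host == domain or host.endswith("." + domain)
            for domain in official_domains
        )
        (official if is_official else third_party).append(url)
    return official, third_party


# Placeholder data: swap in the links cited in your own report.
cited = [
    "https://www.examplebank.com/cards/travel-card",
    "https://cards.examplebank.com/fees",
    "https://compare-cards.example.org/best-travel-cards",
]
official, third_party = split_sources(cited, {"examplebank.com"})

print("Official sources:", official)
print("Verify these against the primary source:", third_party)
```

This only sorts links by domain. It doesn’t check whether a page exists or says what the report claims, so you still need to open the sources yourself.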